ARM Frequently Asked Questions: Summarizing

How do I control the number of decimals for summary means in Summary reports?

How do I control the number of decimals for summary means in Summary reports?

By default, ARM reports means with one more decimal of accuracy than the most precise data point entered in a data column. For example, if the data points 36, 45, and 98.02 are entered in a data column, the most precise value has two decimals, so ARM reports means with three decimals.
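The default rule can be sketched in a few lines of Python. This is a minimal illustration, not ARM's code. It assumes the data points are available as the text that was typed, so a trailing zero such as "98.20" counts as an entered decimal. That may differ from how ARM stores values.

```python
from decimal import Decimal

def default_mean_decimals(entries):
    """Return one more decimal than the most precise entered data point.

    `entries` holds data points as typed text (an assumption of this sketch),
    e.g. ["36", "45", "98.02"].
    """
    def decimals(text):
        exponent = Decimal(text).as_tuple().exponent  # "98.02" -> -2
        return max(0, -exponent)                      # whole numbers -> 0
    return max(decimals(e) for e in entries) + 1

print(default_mean_decimals(["36", "45", "98.02"]))  # 3
```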

There are two methods to control or force the number of decimals of accuracy.

Method 1

The easiest method is to use the 'Force Number of Decimals Accuracy to' option on the General Summary Report Options window. (General Summary report options apply to all summary reports.) With this option, you can enter the number of decimals to use on summary reports for any assessment column that has no entry in the Number of Decimals field of the assessment data header (see Method 2).

Caution: Forcing the number of decimals to 0 or 1 in General Summary Report Options is very likely to hide important differences between small treatment means, such as yield values less than 25.

Method 2

To override the default set by Method 1, enter the desired number of decimals of accuracy in the Number of Decimals field of the plot data header for each data column. Enter "0" to force means to print as whole numbers with no decimals.
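The precedence between the two methods and the default can be summarized in a small Python sketch. The helper names are hypothetical, and the logic is inferred from the description above rather than taken from ARM. Python's formatting rounding may also differ from ARM's in edge cases.

```python
def summary_decimals(default_decimals, forced_general=None, header_decimals=None):
    """Choose the number of decimals for a column's means (hypothetical helper).

    header_decimals:  Number of Decimals entered in the plot data header (Method 2).
    forced_general:   'Force Number of Decimals Accuracy to' value (Method 1).
    default_decimals: ARM's default (one more than the most precise data point).
    """
    if header_decimals is not None:   # Method 2 overrides everything else
        return header_decimals
    if forced_general is not None:    # Method 1 applies when the header is blank
        return forced_general
    return default_decimals

def format_mean(mean, decimals):
    """Format a mean with a fixed number of decimals."""
    return f"{mean:.{decimals}f}"

print(format_mean(12.3456, summary_decimals(3)))        # 12.346
print(format_mean(12.6, summary_decimals(3, 1, 0)))     # 13
```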

The Number of Decimals field is usually located at the bottom of the plot data header, so you may have to scroll the data header window down to display it. In some older GDM study definitions, it is the second entry field on the same line as the number of subsamples. The data header prompt may appear as:

`# Subsamples, Dec.`

If you cannot see a Number of Decimals field on the plot data header, check whether this field is hidden in the current Assessment Data View. Select Tools - Options, then Assessment Data View, and make sure that Visible is checked for the Number of Decimals field.

Why do some treatment means have the same mean comparison letter when the difference between means is larger than the reported LSD?

Why do some treatment means have the same mean comparison letter when the difference between means is larger than the reported LSD?

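Mean comparison letters are assigned by the mean comparison test selected in the AOV Means Table report options, and that test is not necessarily LSD. Tests that adjust for multiple comparisons, such as Student-Newman-Keuls or Tukey's HSD, generally require a larger difference than the LSD. As a result, two means can differ by more than the reported LSD and still share a letter.

To use LSD as the mean comparison test in ARM (or to change the mean comparison method):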

- Select File - Print Reports from the ARM menu bar.

- Double-click on the Summary heading in the left column that lists available reports to expand the list of available summary reports.

- Click once on AOV Means Table in the left column so this choice is highlighted.

- Click the Options button below the list of available reports to display the AOV Summary Report Options.

- Click the down-pointing arrow next to the mean comparison test method, and select LSD to use this test method.

- The F1 help also provides additional information on the mean comparison test methods.

The following information about LSD is taken from 'AOV Means Table Report Options' in ARM Help:

When LSD is used to make an unplanned comparison of the highest and lowest means in a trial with more than two treatments, the difference between them can appear significant even when there is no treatment effect. In other words, when a 5% significance level is used, the actual significance level of that comparison is larger than 5%. Some statisticians have determined that the actual significance level is 13% for a trial with three treatments, 40% for six treatments, 60% for 10 treatments, and 90% for 20 treatments.
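The inflation can be reproduced with a short Monte Carlo sketch in Python. The sketch simulates trials with no treatment effect. It assumes the error variance is known, so the LSD uses a normal critical value instead of Student's t. This is only an illustration and is not the source of the figures quoted from ARM Help. Its estimates should agree with those figures only roughly.

```python
import math
import random
from statistics import NormalDist

def lsd_range_error_rate(n_treatments, alpha=0.05, n_sims=10000, seed=1):
    """Estimate how often max(mean) - min(mean) exceeds the LSD with no treatment effect.

    Assumes a known error variance: each treatment mean has standard error 1,
    so LSD = z * sqrt(2), where z is the two-sided normal critical value.
    """
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = 0.05
    lsd = z * math.sqrt(2)                    # critical difference between two means
    exceed = 0
    for _ in range(n_sims):
        # All treatments share the same true mean (no treatment effect).
        means = [rng.gauss(0.0, 1.0) for _ in range(n_treatments)]
        if max(means) - min(means) > lsd:
            exceed += 1
    return exceed / n_sims

for k in (2, 3, 6, 10, 20):
    print(k, "treatments:", round(lsd_range_error_rate(k), 2))
```

With two treatments, the estimated rate stays near the nominal 5%. As the number of treatments grows, the highest and lowest means drift further apart by chance alone, so the rate climbs.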

The AOV Means Table Report Options also include a setting that makes the mean comparison tests "protected": the option to run mean comparison tests "Only when significant AOV treatment P(F)". When this option is active, ARM does not report any differences as significant if the treatment probability of F is greater than the mean comparison significance level, for example 0.10 (10%). The report then includes the footnote "Mean comparisons performed only when AOV Treatment P(F) is significant at mean comparison OSL."
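The protected logic can be sketched in Python. In this sketch, differences larger than the LSD are declared significant only when the treatment P(F) does not exceed the OSL. It is a simplified illustration: it uses LSD only, it does not assign letters, and the function name is hypothetical.

```python
from itertools import combinations

def significant_pairs(means, lsd, p_f, osl=0.10, protected=True):
    """List treatment pairs whose means differ by more than the LSD.

    means: dict of treatment name -> mean. With protected=True, no pair is
    declared significant when the AOV treatment P(F) is greater than osl.
    """
    if protected and p_f > osl:
        return []
    return [(a, b) for a, b in combinations(means, 2)
            if abs(means[a] - means[b]) > lsd]

means = {"1": 10.0, "2": 14.0, "3": 20.0}            # hypothetical treatment means
print(significant_pairs(means, lsd=5.0, p_f=0.20))   # []
print(significant_pairs(means, lsd=5.0, p_f=0.03))   # [('1', '3'), ('2', '3')]
```

Treatments 1 and 3 differ by 10, which is twice the LSD. However, they are not separated when P(F) is 0.20. This is one way means can share a letter despite a difference larger than the reported LSD.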

Why do all treatment means have "a" as the mean comparison letter?

Why do all treatment means have "a" as the mean comparison letter?

Why are treatment means of transformed data different than means of the original untransformed data?

Why are treatment means of transformed data different than means of the original untransformed data?

Means calculated from transformed data and converted back to the original units (weighted means) differ from the raw data means for the same reason the data had to be transformed in the first place. A detailed explanation depends on which transformation was used.

The following description of this difference, for data transformed using square root, is from page 156 of the "Agricultural Experimentation Design and Analysis" book by Thomas M. Little and F. Jackson Hills, published in 1978 by John Wiley and Sons. The discussion concerns insect count data that were not normally distributed because many of the insect counts were very low; instead, the data followed a Poisson distribution. The data were transformed and analyzed, and means were calculated from the transformed values and then detransformed to the original units.

"The means obtained in this way are smaller than those obtained directly from the raw data because more weight is given to the smaller variates. This is as it should be, since in a Poisson distribution the smaller variates are measured with less sampling error than the larger ones."

In other words, the weighted means are more appropriate because they are calculated from data in which the original heterogeneity problem has been corrected.
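The effect described by Little and Hills can be seen with a handful of counts. This Python sketch assumes a plain square root with no added constant, which is not necessarily the exact form of ARM's AS transformation. The counts are hypothetical.

```python
import math

def detransformed_sqrt_mean(counts):
    """Average the square roots of the counts, then square the result."""
    mean_root = sum(math.sqrt(c) for c in counts) / len(counts)
    return mean_root ** 2

counts = [0, 1, 4, 9, 16]                # hypothetical insect counts
raw_mean = sum(counts) / len(counts)
print(raw_mean)                          # 6.0
print(detransformed_sqrt_mean(counts))   # 4.0
```

The detransformed mean can never be larger than the raw mean. Averaging on the square root scale compresses the large counts, so the small counts carry relatively more weight.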

What should I do if my data does not meet the assumptions of AOV?

What should I do if my data does not meet the assumptions of AOV?

AOV assumes that the data meet the following conditions:

- Normality – the distribution of the observations must be approximately normal, or bell-shaped. Simply put, most observations lie relatively near the mean, so major outliers are not expected. ARM provides two statistics to check this assumption, which can be included on reports by selecting the corresponding AOV Means Table options:

  - Skewness – a measure of how symmetrical the observed values are.

  - Kurtosis – a measure of how ‘peaked’ the data are (how ‘flat’ or ‘pointed’ the distribution is relative to the normal distribution).

- Homogeneity of variances – the variability of observations is approximately equal regardless of which treatment was applied. The mean comparison tests rely heavily on this assumption, because they use a single pooled error variance for all treatments. Use the “Bartlett’s Homogeneity of Variance” option in AOV Report Options to test this assumption.

  - The Box-Whisker graph is a useful tool to visually investigate and demonstrate this concept. Boxes of similar height and shape across all treatments in a data column indicate homogeneous variances.

If the data do not meet these assumptions, ARM offers several remedies:

- Apply automatic transformation – ARM can apply one of the following automatic data correction transformations:

  - Square root (AS) – often used with ‘count’ rating types (e.g. # of insects per plant).

  - Arcsine square root percent (AA) – used with counts expressed as percentages or proportions.

  - Log (AL) – can only be used for non-negative numbers.

- Exclude treatments – ARM can exclude a treatment from the analysis, most often to correct non-homogeneity of variances. This may not be desirable, since every treatment is included in the study for a reason, but it makes the best of a tough situation. Unless something is done to meet the assumptions of AOV, the entire study cannot be evaluated using AOV.

  - ARM can apply the EC action code to exclude the check treatment. EC is often used for data where the untreated check plots are counts of weeds, while the other plots are a “% control” type of rating (thus a different rating type).

- ARM can apply the EC action code to exclude the check treatment. EC is often used for data where the untreated check plots are counts of weeds, while the other plots are a “% control” type of rating (thus a different rating type).

- Exclude replicates – ARM can also exclude a replicate from the analysis in order to meet the assumptions. Again, this is not desirable, but in certain cases it is necessary in order to perform any analysis in ARM.
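This Python sketch shows how a transformation can reduce skewness in count data. The exact formulas behind ARM's AS, AA, and AL codes are not given here, so the forms below are assumptions labeled as hypothetical. AS is taken as a plain square root. AA is taken as the arcsine of the square root of the proportion, in degrees. AL is taken as log10(x + 1); the +1 keeps zero counts defined. The skewness and kurtosis functions use the simple moment-based formulas. ARM may use bias-corrected versions.

```python
import math

def skewness(values):
    """Moment-based sample skewness g1 (0 for perfectly symmetrical data)."""
    n = len(values)
    m = sum(values) / n
    m2 = sum((v - m) ** 2 for v in values) / n
    m3 = sum((v - m) ** 3 for v in values) / n
    return m3 / m2 ** 1.5

def excess_kurtosis(values):
    """Moment-based excess kurtosis g2 (near 0 for normally distributed data)."""
    n = len(values)
    m = sum(values) / n
    m2 = sum((v - m) ** 2 for v in values) / n
    m4 = sum((v - m) ** 4 for v in values) / n
    return m4 / m2 ** 2 - 3.0

# Assumed forms of ARM's transformation codes (hypothetical; see ARM Help).
TRANSFORMS = {
    "AS": lambda x: math.sqrt(x),                                   # counts
    "AA": lambda p: math.degrees(math.asin(math.sqrt(p / 100.0))),  # percent 0-100
    "AL": lambda x: math.log10(x + 1.0),                            # non-negative values
}

counts = [0, 0, 1, 1, 2, 3, 5, 8, 20]   # hypothetical right-skewed counts
print("raw", round(skewness(counts), 2), round(excess_kurtosis(counts), 2))
for code in ("AS", "AL"):
    transformed = [TRANSFORMS[code](x) for x in counts]
    print(code, round(skewness(transformed), 2), round(excess_kurtosis(transformed), 2))
```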

How does ARM calculate the Standard Deviation for the AOV Means Table report?

How does ARM calculate the Standard Deviation for the AOV Means Table report?

By definition, standard deviation is the square root of variance. The standard deviation reported by ARM is the square root of the Error Mean Square (EMS) from the AOV table. When trial data are analyzed as a randomized complete block (RCB), ARM performs a two way analysis of variance (AOV). Both the treatment and the replicate sums of squares are separated from the total sum of squares, and the remainder is the error sum of squares.

Sometimes a user attempts to verify the standard deviation calculated in ARM by using a spreadsheet or a scientific calculator. Note that the standard deviation calculated by a spreadsheet or a scientific calculator is typically based on a one way AOV, which does not remove replicate variation from the error. The EMS (and thus the standard deviation) from a one way AOV is different from the EMS from a two way AOV. For this reason, the standard deviation that ARM calculates for an RCB experimental design will almost always differ from the one calculated with a spreadsheet or scientific calculator.

ARM performs a one way AOV only when analyzing trial data as a completely random experimental design. For a completely random design, the standard deviation calculated by a spreadsheet such as Excel or by a scientific calculator will match the one calculated by ARM.
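The two error terms can be compared with a small Python sketch. It computes the error mean square both ways from the same data table. The sketch assumes a balanced trial with no missing plots; ARM's handling of missing data is not covered. The function name and data are hypothetical.

```python
import math

def rcb_and_oneway_sd(data):
    """Return (RCB standard deviation, one-way standard deviation).

    data[i][j] is the observation for treatment i in replicate j; the table must
    be balanced with no missing plots (an assumption of this sketch).
    """
    t, r = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (t * r)
    trt_means = [sum(row) / r for row in data]
    rep_means = [sum(row[j] for row in data) / t for j in range(r)]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_trt = r * sum((m - grand) ** 2 for m in trt_means)
    ss_rep = t * sum((m - grand) ** 2 for m in rep_means)

    # Two-way AOV (RCB): replicate SS is removed from the error term.
    ems_rcb = (ss_total - ss_trt - ss_rep) / ((t - 1) * (r - 1))
    # One-way AOV (completely random): replicate variation stays in the error.
    ems_oneway = (ss_total - ss_trt) / (t * (r - 1))
    return math.sqrt(ems_rcb), math.sqrt(ems_oneway)

# Hypothetical trial: 2 treatments x 3 replicates.
data = [[10, 12, 14],
        [14, 15, 19]]
sd_rcb, sd_oneway = rcb_and_oneway_sd(data)
print(round(sd_rcb, 3), round(sd_oneway, 3))   # 0.707 2.345
```

In this example, both treatments increase from replicate 1 to replicate 3. Removing that replicate variation shrinks the error term sharply. The one-way value equals the pooled within-treatment standard deviation.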

What is the difference between Bartlett's and Levene's tests of homogeneity of variance?

What is the difference between Bartlett's and Levene's tests of homogeneity of variance?

When the data are reasonably normally distributed, Levene's and Bartlett's tests are comparable, with a slight edge to Bartlett's. (Use the Skewness and Kurtosis statistics to check for normality.)

- If you have strong evidence that your data do in fact come from a normal, or nearly normal, distribution, then Bartlett's test has more statistical power.

- When the data are non-normal or skewed, Levene's test provides better results, because Bartlett's test is sensitive to departures from normality.
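This minimal Python sketch shows how the two tests differ in construction, using their textbook formulas. Bartlett's test compares the logarithms of the treatment variances. Levene's test runs a one way AOV on the absolute deviations from the treatment means, which makes it less sensitive to non-normal data. The sketch returns the statistics only, without P values, and uses the original mean-centred form of Levene's test. It is not ARM's implementation; ARM's exact variant is not stated here, and the function names are hypothetical.

```python
import math
from statistics import mean, variance

def bartlett_statistic(groups):
    """Bartlett's chi-square statistic (df = k - 1). Needs nonzero variances."""
    k = len(groups)
    sizes = [len(g) for g in groups]
    n_total = sum(sizes)
    variances = [variance(g) for g in groups]          # sample (n - 1) variances
    pooled = sum((n - 1) * v for n, v in zip(sizes, variances)) / (n_total - k)
    numerator = (n_total - k) * math.log(pooled) - sum(
        (n - 1) * math.log(v) for n, v in zip(sizes, variances))
    correction = 1 + (sum(1 / (n - 1) for n in sizes) - 1 / (n_total - k)) / (3 * (k - 1))
    return numerator / correction

def levene_statistic(groups):
    """Levene's W statistic (mean-centred), compared with F(k - 1, N - k)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # Absolute deviations of each observation from its own group mean.
    z = [[abs(x - mean(g)) for x in g] for g in groups]
    z_group = [mean(zg) for zg in z]
    z_all = sum(sum(zg) for zg in z) / n_total
    between = sum(len(zg) * (m - z_all) ** 2 for zg, m in zip(z, z_group))
    within = sum((v - m) ** 2 for zg, m in zip(z, z_group) for v in zg)
    return (n_total - k) / (k - 1) * between / within

groups = [[1, 2, 3], [0, 5, 10]]              # hypothetical treatment data
print(round(bartlett_statistic(groups), 4))   # 3.0576
print(round(levene_statistic(groups), 4))     # 2.4615
```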

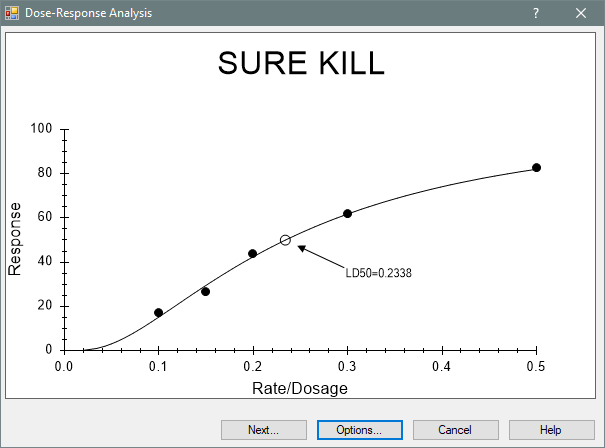

Dose-Response Analysis Report

Dose-Response Analysis Report